Standards-based grading (SBG) has become my secret weapon for centering my evaluation practices around what matters most in my teaching: growth, learning, and mastery. Here’s how I wield it in my classes.

Look on my works, ye mighty

This fall I’m teaching one section of first-semester abstract algebra to a class of math majors, and one online section of a general-education survey of math for nonmajors. Both are implementing standards-based grading through-and-through, in some slightly different ways. Download PDF copies of the syllabi below; the remainder of this post is dedicated to explaining how these syllabi work and the design goals I had for them.

The visual syllabus

When I began designing a course for SBG, I wanted my syllabus to adequately reflect the difference I hoped it would make to my students’ experience. So when I scrapped traditional grading, I also scrapped my traditional syllabus design in favor of a simplified, four-page visual syllabus. My goal was to make the syllabus both something that students would want to read and interact with, and something they would keep within reach all semester out of necessity — because it would provide them a way to track their progress.

The result was a thin, bifold brochure with a catchy introduction on the first page, a detailed learning plan and progress chart on the inside pages, and a course calendar and abbreviated course policies on the back. (I posted the more detailed boilerplate academic policies that bloat so many syllabi on the course website instead.)

In the first semester, I didn’t list the learning standards on the syllabus itself. I’ve since made a point to incorporate them into this format so that any stakeholders beyond my classroom who might review my syllabi would have that information.

This change from traditional to visual syllabus neatly demarcates my change from traditional to standards-based grading as you leaf through my course materials over the years. I’m hoping this visual syllabus accomplishes the following things.

Goal 1: Make the standards clear

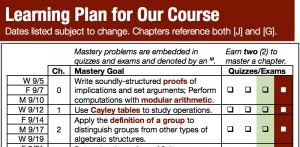

I admire faculty who teach standards-based courses like calculus, where the learning standards can be broken down into fifty or more atomic competencies assessed at ever finer levels of detail. I haven’t tried SBG in those kinds of courses yet, so I’ve tried to keep my standards to a spare list of 3-5 per chapter. These feature prominently on the back page of the syllabus, aligned to the textbook chapters as well as to the assessments on which students can demonstrate mastery of them.

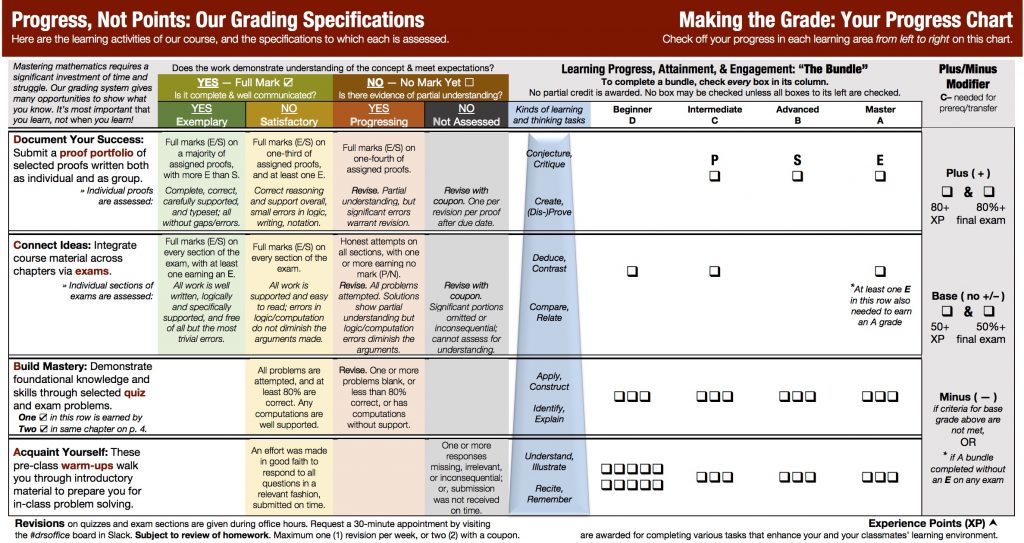

Goal 2: Integrate specifications with assignment types with grades

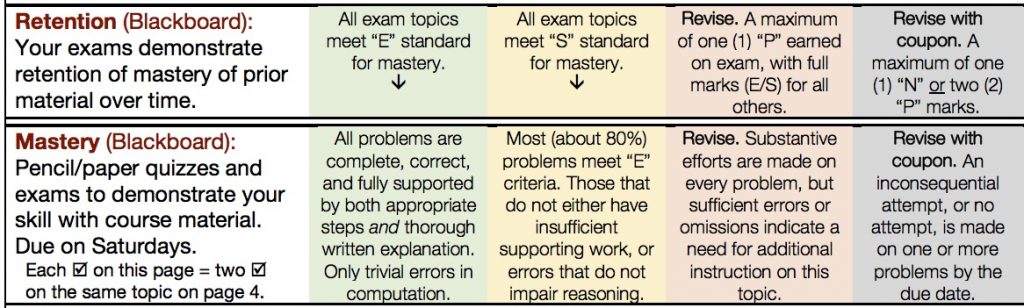

At its heart, every syllabus is about answering three questions: What will we learn? How will we learn it? And how will that learning be recognized in our final grade? I’m sure this can be done at length in dozens of pages of prose, but my students aren’t interested in reading such a thing, nor am I interested in writing it. Instead, I combine Kate Owens’ excellent idea of a color-keyed specifications rubric with Robert Talbert’s design for a grade “checklist” students may use to track their progress toward earning course grades. The rubric gives clear descriptors for each level of performance on each type of assignment, both those that display mastery (Excellent; Satisfactory) and those that do not (Progressing; Not Assessed).

The full chart that results takes up a two-page spread that comprises the heart and soul of my syllabus, and it becomes my students’ Rosetta stone for determining whether they’re on track to reach their goals — or if not, where they should focus their efforts on improving.

This semester, I gilded this lily by incorporating a modified Bloom’s taxonomy into the progress chart, arranging the assignment types in order from those that require mostly lower-order thinking to those that necessitate higher-order learning. This, I hope, helps to justify to students why certain types of assignments mostly unlock passing grades while others unlock high-achievement grades. (I tried to make the checkboxes in the chart all fit a pyramidal pattern to reinforce the metaphor, but in a rare feat for me, I decided it was too visually confounding to be worth doing.)

Also, the names of my levels of mastery now permit me to call this system “ESPN grading.” If it flops, maybe at least I can land an ABC sponsorship for next semester.

Goal 3: Incentivize retention of material over time

One of the hardest lessons of teaching in general is to mind the forgetting curve. Students who master a skill this week may, if their subsequent work does not consistently draw upon it, have lost that skill altogether several weeks later. If those several weeks end during my semester, I can help the student to review and reassess until they are back up to speed. If those weeks end and the student finds themselves in a successor course taught by another instructor, well, they might not get that opportunity. Some SBG implementations can suffer from “chunking” effects. The term refers to the practice, occasionally prescribed for students with learning disabilities related to memory and recall, of dividing up high-stakes assessments into several coherent “chunks” delivered one by one. How do I ensure that my students not only master key skills, but retain that mastery over the semester (and, hopefully, beyond)?

One option is to preserve the traditional comprehensive final exam. In my first semesters of SBG, I did this, weighting my standards-based bundle grades at 80% of students’ overall mark and the final exam at 20%. This had the advantage of keeping students cognizant of the expectation that they would need to demonstrate mastery once again at the end of the course. However, many students did not perform well on that final exam, in part because the transparency of the “bundle” grading system had assured them before the exam that they’d already sewn up a grade they were satisfied with.

For example, at the beginning of the semester, once students understood the grading system well enough to do so, I assigned them to write a page reflecting on their initial state of knowledge about the course material and setting a goal for what final grade they would work toward. Traditional grading is tacitly predicated on the notion that every student should always aim for an “A.” (That goal is understandable to professors who assume that their job is to train up more future professors.) But this exercise opened my eyes to a wider range of student goals. Many students said things like “I’ll be happy making a C because I know this is a challenging course and I just need to graduate this spring.” If that student performed well enough to earn a “B”-level bundle during the semester (70 out of 80 points), their C was already guaranteed before the final, and so they could afford to punt the comprehensive exam.
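The arithmetic behind that “guaranteed C” can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 80/20 split comes from the weighting described above, but the 10-point letter cutoffs (90/80/70/60) and the function names are hypothetical, since the actual scale isn’t given here.

```python
# Weights described above: bundle grade out of 80, final exam out of 20.
BUNDLE_MAX = 80
FINAL_MAX = 20

# ASSUMPTION: a conventional 10-point letter scale. The real cutoffs may differ.
CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]


def letter(total):
    """Map a 0-100 course total to a letter on the assumed scale."""
    for cutoff, grade in CUTOFFS:
        if total >= cutoff:
            return grade
    return "F"


def guaranteed_grade(bundle_points):
    """Grade the student keeps even with a zero on the final exam."""
    return letter(bundle_points)


def best_possible_grade(bundle_points):
    """Grade the student reaches with a perfect final exam."""
    return letter(bundle_points + FINAL_MAX)


def final_points_needed(bundle_points, target):
    """Final exam points required to reach the target letter (0 if locked in)."""
    cutoff = {grade: c for c, grade in CUTOFFS}[target]
    return max(0, cutoff - bundle_points)
```

With 70 bundle points, `guaranteed_grade(70)` gives a C and `final_points_needed(70, "C")` gives 0, which is exactly why a student whose goal was a C had no incentive to prepare for the exam.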

Still, if for no other reason than to justify the SBG approach to colleagues, and particularly colleagues who revere comprehensive high-stakes exams as the gold standard of measuring and improving student learning (CNN chyron: “It Isn’t”), I’m looking for a better system to track how well students are retaining course material over time. I’ve retained my final exams, but instead of weighting them, which is an anti-mastery-grading approach, I’m taking another cue from Robert Talbert and using them purely as (a) one final opportunity to demonstrate mastery on heretofore un-mastered learning standards, and (b) an opportunity for half-letter-grade improvement. This softens the blow of the high-stakes exam, since the “base grade” for each student (A, B, C, D) is determined solely by their mastery throughout the semester, while the exam itself only affects whether that base grade is decorated with a minus, a plus, or nothing.

In my abstract algebra course this fall, the cumulative nature of the material lends itself to “built-in” retention checks: students who can capably write a proof about quotient groups mid-semester can safely be said to have retained the basics about groups covered earlier in the semester. So for this course, the way I’m using the final exam will suffice.

In my general-education math survey course, though, the material is organized into three units (logic and set theory, function models, and statistical literacy) that are nearly disjoint in their expectations of mastery. So here, I’m using quizzes and exams in a different way.

“Mastery” of an individual topic can be earned on individual quizzes and individual sections of the unit exams. (This version of mastery is therefore somewhat chunked, except that a student must demonstrate mastery of a topic on two separate occasions during the semester to earn credit for it.) Meanwhile, “Retention,” a separate evaluation component, is awarded for unit exams that demonstrate mastery of all unit topics at once. So for instance, a student might take a Unit 2 exam that assesses standards 5, 6, 7, and 8. If the student earns mastery credit for standards 5, 6, and 8, then these accrue toward the “Mastery” grade. But the exam overall receives a Progressing mark in the “Retention” category, and the student must revise their work on Standard 7 before the exam receives Retention credit.
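For readers who think in code, here is a minimal sketch of how those two components could be tallied. It assumes each quiz or exam is recorded as the set of standards on which the student showed mastery that time; the function names and the “Retained” label for a successful exam are hypothetical placeholders, not anything from my gradebook.

```python
from collections import Counter

# "Two separate occasions," as described above.
MASTERY_OCCASIONS_REQUIRED = 2


def mastered_standards(occasions):
    """Return the standards shown on enough separate occasions.

    occasions: list of sets, one per quiz or exam, each holding the
    standards on which the student demonstrated mastery that time.
    """
    counts = Counter(std for shown in occasions for std in shown)
    return {std for std, n in counts.items() if n >= MASTERY_OCCASIONS_REQUIRED}


def retention_mark(assessed, shown):
    """Mark one unit exam in the Retention category.

    The exam earns credit only if every assessed standard was mastered
    on that single exam; otherwise it is Progressing, and the missing
    standards are returned so the student knows what to revise.
    "Retained" is a HYPOTHETICAL label for the successful case.
    """
    missing = set(assessed) - set(shown)
    if missing:
        return "Progressing", missing
    return "Retained", set()


# The Unit 2 example: the exam assesses 5-8, the student masters 5, 6, and 8.
earlier_quiz = {5, 6}
unit2_exam = {5, 6, 8}
mastery = mastered_standards([earlier_quiz, unit2_exam])
mark, to_revise = retention_mark({5, 6, 7, 8}, unit2_exam)
```

Here `mark` is Progressing with Standard 7 left to revise, while standards 5 and 6 already count toward Mastery because they were shown twice. Standard 8 has been shown only once, so it still needs a second occasion.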

Goal 4: Incentivize positive participation behaviors

In another borrowed idea from Robert Talbert, I’ve rolled out experience points (XP) this fall: weekly offerings of token credit for behaviors that contribute to the learning environment, such as presenting work in front of the classroom, contributing to reading discussions online, and so forth.

In the past, I included such activities as individual parts of the grading system, with their own specifications and rows on the progress chart. That, I think, was responsible for a lot of back-breaking assessment work on my part. So now I keep the tasks simple, easy for me to check, and varied throughout the course. The XP students earn then combines with their final exam performance to determine the half-grade (+/–) modifier for their base grade.

Here goes nothing

I continue to believe as firmly as ever in the benefits — both to learning and to humanizing my classroom — of using standards-based grading in place of traditional points and percentages. But there are still so many variables I can tweak, gaps and pitfalls that I don’t anticipate until mid-semester, and voices in my head that continue to ask, despite mounting evidence in favor of SBG, “are you sure this is even a legitimate way to teach?”

But those voices get quieter the first day in the classroom, which, as I publish this post, is five days from now. Here’s to a successful, standards-based fall.